Security and Human Behavior 2025 Day 2

Security and Human Behavior live blog.

Contents:

- Session 5: Wickedness 2 - Sunny Consolvo, Jonathan Lusthaus, Michele Maasberg, Tom Meurs, Kieron Ivy Turk, Anh V. Vu

- Session 6: How people think - Sharmin Ahmed, Zinaida Benenson, Judith Donath, Serge Egelman, Ryan Shandler, Varad Vishwarupe

- Session 7: Governance and Law Enforcement - Vaibhav Garg, Eliot Lear, Jon Lindsay, Harshini Sri Ramulu, Sunoo Park, Josephine Wolff

- Session 8: Security and Safety - Joseph Da Silva, Damon McCoy, Felix Reichmann, Bruce Schneier, Adam Shostack, Frank Stajano

- Overall themes - Kami’s view

Session 5: Wickedness 2

Sunny Consolvo, Jonathan Lusthaus, Michele Maasberg, Tom Meurs, Kieron Ivy Turk, Anh V. Vu

Sunny Consolvo

Research into technology-facilitated abuse. Google’s at-risk research program works on the wicked problem of such abuse.

Wicked problems are ones for which there may be no perfect solution. For such problems it is better to keep improving the current state for users, even if each step is incremental.

User States Framework (Mathews et al., ToCHI, 2025)

- Prevention - Minimize exposure

- Monitoring - Watch for signs

- Active Event - Stop or respond

- Recovery - fix damage, determine what happened, cope

When it comes to technology for broad use, creating safe technology for everyone is a wicked problem.

Supporting the Digital Safety of At-Risk Users: Lessons Learned from 9+ Years of Research & Training

Jonathan Lusthaus

Reflecting on security behavior as a sociologist.

Interviews with all sorts of people, including law enforcement and criminals, around profit-driven cybercrime. From the interviews it became clear that there are hot-spots for cybercrime: some countries or locations are more likely to have such activity. The criminal groups in some places treat it like a business. They have office space, they go to work, and they are friends with some of their colleagues. The positions of major hubs of cybercrime do not shift much. They are surprisingly stable.

Case study research looks at how cybercrime groups structure their work and how stable that structure is. The answer is that it is quite stable. Once a crime group picks an approach like ransomware, they tend to keep using it for years.

Proposed definition of cybercrime: “Crime that makes use of technology in a significant way.”

Michele Maasberg

Title: Authenticity under adversarial conditions

Interested in detecting falsified or manipulated signals using computational or cryptographic methods.

Interesting cryptographic problems that were left by people. There are several unsolved cases that feature an encrypted message. These are challenging to solve because not only do we not know the key, we don’t even know the algorithm used. One way to approach the problem is to look at the person who wrote the cipher. Looking at a sculpture at the NSA, it was created by an artist. Looking at the artist themselves makes it clear what set of possible algorithms and types of approaches the artist might have used.

Tom Meurs

Title: Disrupting Ransomware Groups: Modeling Leaksite Data

Ransomware will use cryptography to encrypt all the files, sometimes changing filenames to things like file.CONTI.

But defenders started using backups more. So criminal groups started moving to blackmail where if they are not paid the attacker will publish the files. Threatening to leak the data increases the victim’s willingness to pay significantly.

A leak site will not stay up indefinitely. Sites are sometimes taken down by law enforcement, attacked by other groups, or go down for other reasons.

Law enforcement does all sorts of things like freezing assets, creating decryption solutions, and arresting people.

Internal disputes also cause disruption to attackers. They do things like claiming law enforcement took the site down, leaking chats, and doxing of other hackers.

We find that internal disputes have a large impact on whether a group stops, though law enforcement intervention also has a strong impact. But doing that attribution is challenging. For example, what does “stop” mean? A group might re-form or otherwise change what they look like.

Kieron Ivy Turk

Title: Threat me right: A human HARMS threat modeling devices for technology abuse

Lots of ways to do threat modeling:

Privacy Threats:

- LINDDUN

Technical Attacks

- CIA

- STRIDE

But what about things like intimate partner abuse? Where are the threats there?

Developed the Human HARMS model.

Investigating IoT Abuse. Had normal users learn about IoT devices and then brainstorm how to use these devices in an unexpected and possibly problematic way. Thought-about attacks tend to focus on existing features rather than “hacking” which might try and adjust how features work.

Threat me right: a Human Harms Threat Model for Technical Systems

Trying to test misuse and abuse in real life is challenging for many ethics reasons. So instead they are experimenting with applying them in existing real-life games. For example, Cambridge has an “assassin” game where participants try to “assassinate” a target via a blunt object with the word “knife” written on it. The researchers tried adding AirTags into this game, including the anti-stalking software, to see if they helped the

Anh V. Vu

Title: Assessing the Aftermath: The effect of a global takedown against DDoS-for-hire services

A booter service (DDoS-for-hire) is an online service that can be paid to send a very large amount of traffic to a victim of the customer’s choice. These services are well designed, with things like APIs.

There has been a global attempt to take down such booter services.

The research looked at traffic going to the taken-down pages, as well as Telegram channels and global attack records.

Booters quickly resurrect. Even if they are taken offline, they are sometimes back within a day, though it can also take longer, and getting the customers back can take longer still.

By looking at the traffic sent to the website of a booter that had been taken down, it is possible to track the impact of each takedown.

Session 6: How people think

Sharmin Ahmed, Zinaida Benenson, Judith Donath, Serge Egelman, Ryan Shandler, Varad Vishwarupe

Sharmin Ahmed

Title: Made-up email address

We are asked for email addresses everywhere, even when it makes no sense. For example, airport wifi. One self-defense approach is to input fake email addresses as a way to protect privacy.

Through interviews they find that people make up email addresses in cases like:

- Registration for coupons, samples

- Participation in campaigns, such as an advertising campaign at the mall

- Interaction with untrustworthy

Also:

- Maintaining a privacy boundary is a big reason for making up the email address.

- Managing information overload is also an issue. Giving out an email address means more emails. The unsubscribe link does not work. Even calling doesn’t stop the emails.

- Weigh benefits. For a one-time interaction they make up the address, but if there are more benefits they may give a real address.

Social centric influences

- Conforming to social norms - when asked to give an address it seems awkward to say “no” so instead they invent one.

- Navigating guilt and self-consciousness

Threat models around the email address

- Potential unwanted disclosure

- Risk of email address sell out

- Protection from hacking and security breaches

Mental models about email addresses

- Avoid consequences to others - They make up very different emails to avoid accidentally sending to a real person.

- Avoid personal repercussions -

- Assumptions about what makes an email address -

When people make up email addresses they tend to modify their existing one, for example by combining two existing emails. So the made-up addresses are not random characters. They are fake addresses that might actually belong to someone. This may be contributing to the issue of misdirected emails.

Zinaida Benenson

Title: Achieving Resilience: Data loss and recovery on devices for personal use in three countries

Losing data is a common and problematic experience. This work looked at data loss in three countries. A survey was run. About half of the participants had lost data, most of them 3 or more years ago. Unsurprisingly, most people found the situation stressful. The most stress was experienced by those who had a backup, which should be good, but getting data restored from the backup was challenging. The most important factor that causes people to set up a backup is a prior loss experience.

Few people used third-party software or network storage. More often external drives were used. Some people also back up via email. Younger people back up less and older people more, though that is reversed for phones, where younger people were more likely to back up.

When backing up users favor backing up content over backing up “functionality” like the operating system.

For those who never back up there is a range of reasons. Some think it is unnecessary because nothing has ever happened to them, or they think that they have nothing important on the device. But a big reason is that they thought backup would be too complicated.

Judith Donath

Title: Thinking about how we think about AI

Metaphors We Live By, by George Lakoff and Mark Johnson

Metaphors exist in many aspects of our lives. Even language itself is a form of metaphor. Metaphors shape how we think about abstract concepts. The word “argument”, for example, is often framed in English in terms of war. You say things like “I demolished his argument”. If we thought of an argument more like a dance, how would that change the framing of an argument and how we think about it?

The “desktop” metaphor is based on the concept that a computer is for a worker who works at a desk. This framing of a computer as something that sits on or replaces your desk heavily shapes how we think about and use the technology.

Move forward to AI. The idea of “chatbots” is framed around it being human-like. The language is very anthropomorphic. A Google search, for example, has no anthropomorphic elements. But an LLM chat box that starts with “How can I help you today?” adds an anthropomorphic element.

Computing machinery and intelligence - A. M. Turing

Some of Turing’s early work frames machine thinking around faking being a human.

Well are there other metaphors we could use?

If we look at human learning there are other metaphors that people could use that reference learning instead, such as written documents and libraries. A library requires solving the problem of search: there is so much information that finding what is needed is hard. Google focuses on that problem of finding. What if we thought about AI as the next step beyond the library? Then we no longer find; instead we think about how information and knowledge are presented. This metaphor is tool-based rather than a metaphor of a human.

Serge Egelman

Title: Anonymity, Consent, and Other Mobile Lies: An exploration of the data economy

IAPP textbook definitions of anonymous data and de-identified data: anonymous data cannot be used to identify people, while de-identified data means that identification is unlikely but could theoretically happen.

Unique identifiers, such as unique phone identifiers, can persistently identify a person. The ICO similarly states that device IDs are personal identifiers.

BUT in courts, companies are arguing that things like the Google Advertising ID are not tied to a user or a user identity.

There are claims that hashed IDs cannot be reversed. But if you want to know if a hash is a specific email, it is an easy lookup.

It gets even worse when things like gender are hashed. A bit of thought shows that there is a fairly small set of possible values and therefore not many possible hashes.
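To make the lookup point concrete, here is a minimal sketch. It assumes the IDs are plain unsalted SHA-256 hashes of lowercased values, which is an assumption for illustration and not something stated in the talk. The candidate lists and function names are made up.

```python
import hashlib


def sha256_hex(value: str) -> str:
    """Hash a value the way a data broker might (assumed: trimmed, lowercased, unsalted)."""
    return hashlib.sha256(value.strip().lower().encode("utf-8")).hexdigest()


def build_lookup(candidates):
    """Precompute hash -> original value for every candidate we can guess."""
    return {sha256_hex(c): c for c in candidates}


# Hypothetical list of addresses an attacker already holds (e.g. from a breach).
candidate_emails = ["alice@example.com", "bob@example.com", "carol@example.org"]
email_lookup = build_lookup(candidate_emails)

# A "hashed ID" found in a purchased dataset.
hashed_id = sha256_hex("Bob@Example.com")
recovered_email = email_lookup.get(hashed_id)

# Low-cardinality fields are worse: every possible value fits in a tiny table.
gender_lookup = build_lookup(["male", "female", "nonbinary", "unknown"])
recovered_gender = gender_lookup.get(sha256_hex("female"))

print(recovered_email, recovered_gender)
```

An address that is not in the candidate list simply returns `None`, so the approach only works as well as the attacker's list of guesses. For emails and small category fields, those lists are easy to come by.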

No, hashing still doesn’t make your data anonymous

So the research team bought some anonymized data. They were able to reverse 97% of the hashed emails. Then the researchers emailed the addresses and asked if they had consented. Most people could not remember consenting to this.

Ryan Shandler

Looking at how user interfaces impact human decisions.

Varad Vishwarupe

Title: Mind in the Loop: How AI companions Shape Human Behavior

Humans rely on chatbots. Some of those engagements are more emotion-based and some are more productivity-based. The more emotion-focused ones are AI companions that try to do things like replace human connection.

Session 7: Governance and Law Enforcement

Vaibhav Garg, Eliot Lear, Jon Lindsay, Harshini Sri Ramulu, Sunoo Park, Josephine Wolff

Vaibhav Garg

Community-based governance.

Eliot Lear

Title: Alice & Bob don’t live here anymore

Apple and the UK had a dispute around encryption which resulted in… BBC: Apple pulls data protection tool after UK government security row

The talk is inspired by the Apple/UK event and a reflection on how things got here and what we can do about it.

We, software people, have a catastrophe-type model for security. But that means something different to software people, government, and the general population. There is also an issue of credibility. Despite much evidence, some still dogmatically keep and perpetuate views that contradict the evidence, which makes the experts seem to be in disagreement when in truth most do agree. All of this makes it harder to engage in the policy-creation dialog.

These points remind me (Kami) of Dr Sasse’s earlier point about how there is no garbage collection for outdated advice. We used to suggest that passwords be changed every month. That is no longer good advice, and there is much evidence on that point, but the old now inaccurate advice is never cleaned up and therefore persists.

Jon Lindsay

or “tell me about a complicated man, Muse, tell me how he wandered and was lost”

Age of Deception: Cybersecurity as Secret Statecraft by Jon R. Lindsay

Very entertaining linking of modern security to “traditional military interactions” aka old stories like the Iliad and Odyssey.

Harshini Sri Ramulu

Unintended consequences: unforeseen outcomes of purposeful actions (development decisions)

There are many ways software can be used in a way that was not originally intended or not provide properties users want. These unintended consequences can negatively impact software uptake along with other issues.

Developers were interviewed to understand their views.

Many are driven by personal motivations to do good. That said, many developers are reactive: they wait for users to raise issues, which can result in the solution only coming into existence after harm has happened.

Participants also tended to view their intentions to be good and therefore they did not see or believe that the software could or would be used for harm.

Developers also have no control over some aspects of their software. Open source, for example, means that people can use it freely which can also mean that groups a developer may not approve of will use it.

Lack of awareness of unintended consequences

Recommendations

- Technical debt

- High ethical debt

- Harms vulnerable groups by solving problems in hindsight

- Security tools abandoned, resulting in even lower security

- Developers balance different roles and priorities

- Lack of incentives to avoid the shift of risk to users

- Ethical review of software should happen similar to other review

Security and privacy software creators’ perspectives on unintended consequences

Sunoo Park

Title: The Case of EncroChat: A Real-World law-enforcement hack

EncroChat was a communication service provider that offered modified Android smartphones that had end-to-end encrypted messaging built in. The phones were designed to be hardened against forensic examination.

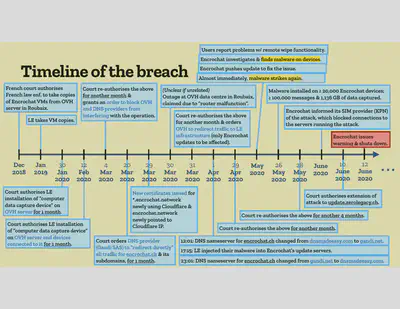

Authorities collected some EncroChat VMs. Most were encrypted, but a few were not… The non-encrypted ones had the keys for the others. Other VMs were decrypted via dictionary-based password guessing. Authorities then used approaches such as DNS provider redirection and changing of certificates. Authorities then used these capabilities to inject malicious code onto EncroChat phones, which started collecting things like chat message content and the phone unlock passwords.

The above diagram was presented as a detailed breakdown. I can only imagine how much time went into bringing together the disparate sources of information required to construct this chart.

Josephine Wolff

What happens after large cybersecurity events? Issues like lawsuits, claims, and what governments do.

There are some things that governments do that no one else can do. There are also some interesting competitions such as the Cyber9/12 for students exploring how to handle policies around cybersecurity incidents.

Lots of issues like cryptocurrency

Most individual solutions are not very effective on their own. But the picture is likely different when seen more holistically. And how do we think about or structure such an understanding?

Indictment - Fascinating public indictments from the Department of Justice including lots of detail around hackers.

Economic espionage - Equifax breach

Technical takedowns have a fascinating progression. The activity by state-backed actors allows much more aggressive take-down activity.

Session 8: Security and Safety

Joseph Da Silva, Damon McCoy, Felix Reichmann, Bruce Schneier, Adam Shostack, Frank Stajano

Joseph Da Silva

The purpose of the CISO.

Security is seen as being mysterious and intractable. Comment about how CISOs speak in “hieroglyphs” which also makes it hard for people to challenge us.

Cybersecurity also uses lots of moral-laden terms like “rogue states”, “gangs”, “warfare”. This conceptualization impacts how we approach the issue. “Suspect communities” within our own organizations. Conceptual focus on finding people who do the wrong things.

Acceptance of surveillance by governance as something that just happens and is not avoidable.

CISOs see themselves as “guards” but also as “heroes” who keep the attackers out of the organization.

Cybersecurity is rife with the use of male words that align with masculinity. Security is “hard” or “soft”. We talk about “breaches” instead of “data loss”. User issues are “fluffy” as opposed to “hard controls”.

Protection expertise and domination: cyber-security masculinity in practice

Damon McCoy

Interesting thoughts about “harm mathematics” and how the community should think about harms.

Bruce Schneier

AI and security. Latest book coming out on AI and democracy.

People will use and trust AI systems fairly broadly even if they are not trustworthy. And trustworthy AI may become a problem of cybersecurity, which is problematic. There are issues like black-box systems, which make it hard to understand what is going on internally. The new problem here though is integrity.

Integrity traditionally is about not modifying data. We could think about data being correct and accurate when collected and then through all its usages, combinations, modifications. We have current integrity systems like “undo” and “reboot” which bring systems back to known good states. Digital signatures are an integrity measure. It is also about ensuring that something is not missing, such as detecting dropped packets. Checksums. Tesla manipulating odometer readings is an integrity attack.
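As a rough illustration of an integrity check, the sketch below tags a reading with an HMAC and then detects a modified copy. This is a keyed message authentication code, not a digital signature: it uses a shared secret and no public key. The key, the message format, and the function names are all made up for the example and are not from the talk.

```python
import hashlib
import hmac

# Hypothetical shared secret; a real system would store and rotate this securely.
SECRET_KEY = b"demo-key-not-for-production"


def sign(message: bytes, key: bytes = SECRET_KEY) -> str:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign(message, key), tag)


# Tag a reading when it is collected.
reading = b"odometer_km=48210"
tag = sign(reading)

# Later, check both the original and a tampered copy against the stored tag.
original_ok = verify(reading, tag)
tampered_ok = verify(b"odometer_km=18210", tag)

print(original_ok, tampered_ok)
```

A check like this only shows that the data was not changed after it was tagged. It says nothing about whether the reading was accurate when collected, which is the broader sense of integrity discussed in the talk.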

Exposing personal data on a site is a confidentiality issue even if no one accessed it. Possible edits to a system are an integrity attack even if no one modifies anything.

Prompt injection and stickers on signs that impact AI are integrity attacks. Misinformation is an integrity attack.

Looking at it this way, integrity is important for AI. Integrity is critical for these systems or everything falls apart.

CIA security definition. The early web was very focused on availability. Web 2.0 is all about confidentiality: all the logins and encryption. Web 3.0 is distributed systems, IoT, and AI agents, all of which require verifiable, trustworthy computation and data. All of it requires integrity.

Integrity will become the key security issue of the next decade.

This is a call to research. We need a serious look into verifiable sensors. How do we audit AI output? What does an integrity breach look like? How do we identify one? How do we recover from an integrity failure?

We have a language problem. “Integrous” is the word for having integrity, but the dictionary claims the word is obsolete.

Conclusion: We should all think about integrous system design.

Adam Shostack

Title: Cybersecurity Public Health

Public health as a resource for cybersecurity. Not as a metaphor, but instead as a set of tools and approaches which could be adapted by security and human behavior.

Public health (not necessarily individual doctors)

- Focus on health of populations, not individuals

- Measure harms, their prevalence and incidence

- Measure interventions

There are some nice tie-ins with presentations at SHB.

- Simon: “Is there a role for usability researchers to negotiate interventions?” - The need for interventions is clear.

- Sunny: “What is the #1 thing to do?” - hard to answer because we need other answers: what is the threat?

- “Why do we have such different numbers about basic security issues, such as what percentage of breaches come from users clicking links.”

Belief models as toolkits: Health belief model (individual perceptions, modifying factors, likelihood of action). Health has many toolkits for each of these.

Cyber belief/threat prevention model. Which translates well out of health. Helps explain why CISOs were having trouble responding to Log4J.

Cyber Public Health Workstream (Shostack + Associates)

A Cyber Belief Model Technical Report 23-01, CyberGreen

Rebekah Overdorf

Title: The battle for Information Integrity and why there is no simple fix

Malicious actions on social media. The goal is normally content inflation. Getting more people to see your content, and there are many ways to do it.

People doing bad stuff try and hide what they are doing.

Lots of bots tweet with a keyword, which lands it on a trending topic. Then all the tweets are deleted at the same time. At the time users could report tweets but not trends.

Once the tweets are deleted the account adjusts itself. Suddenly there is a new screen name and the account re-brands.

Bots are also not bots all the time. Some are (temporarily) compromised accounts. The account is compromised and starts tweeting something and then immediately deleting the tweet to prevent the real user from noticing.

Ephemeral Astroturfing attacks: The case of fake twitter trends

Frank Stajano

Title: Security of programmable digital money

Thoughts on how to attack digital money automatically. Ideas around things like smart contracts. Where attacks and counter-attacks can be done automatically, fast, and at scale.

The 2025 Bybit Heist - done through spear phishing and then forging the UI the victim was seeing.

Technology changes very fast. Human behavior changes very slowly.

Industrial revolution outsourced labor to machines. Computers outsourced data to machines. AI outsources decisions to machines. Which opens some obvious ethics concerns.

Liability: there is also a question of liability around AI decisions. Right now it looks a bit like liability dumping, particularly liability being dumped on individuals like customers and employees.

Microsoft encourages the use of Copilot but also makes users click through a dialog stating that they are individually liable for any copyright issues from using Copilot.

Individuals who delegate (to machines, employees, etc.) must be held responsible.

But attackers will attack precisely where the human authorizes the subordinate.

Overall Themes (Kami’s views)

My personal views about some of the high-level themes I noticed across the presenters.

Metaphors and framing

Quite a few presenters touched on the issue of metaphors. Judith Donath pointed out that metaphors shape our thinking and how we approach problems. We think about security differently if it is framed as a war as opposed to a dance or even live improv. Language impacts our solutions as well as who we choose to solve the problem. Hiring retired generals is a good idea if you are solving war problems, but maybe not if you actually want resiliency. For that you might want an engineer.

Security also tends to use war and military metaphors. We “attack” and “defend”. These metaphors are also often masculine-based. Security controls can be “hard” or “soft”. An attacker “penetrates the firewall”.

The issue of metaphors is also a barrier for human behavior research because “attack” and “defend” thinking does not necessarily map well to issues like “supporting” staff. In the military the troops can be ordered to do certain actions. But that model does not always work well in a corporate environment.

Data integrity and authenticity

Bruce Schneier focused on the idea of integrity and how this new age of AI means that we will or at least should start caring more about the integrity of data and the source of recommendations. I agree, though I also think we need to worry more about authenticity and being able to trace with high accuracy the sources of the data and the sources of inputs.

Computer-generated data is also being used in court cases. This raises the issue of whether and how the courts can trust the data (Steven Murdoch). Cases like EncroChat (Sunoo Park) are based entirely on computer data. And worse, some of the data is generated on attacker-owned computers which were then accessed. Trust of such data is not obvious.

Proprietary programs also make integrity a hard problem. How does one prove that a program is biased or inaccurate without the ability to examine the program itself? This issue also extends to “black box” systems like AI where even if the model is public, reasoning about its internal structure is prohibitively hard.

Arguments over data interpretation in courts are also interesting and complicated. Serge Egelman pointed out how in one case “hashed” was explained as fully protecting against re-identification, while the company’s own website advertised the ability to re-identify hashes. The integrity of data is not only in the data itself but also in forming a clear way that data processing can be interpreted.

Advice garbage collection

I loved this framing from Angela Sasse. Much early security advice was based more on intuition than on scientific processes or formal proofs. Decades of work have resulted in massive changes to what we now consider good advice and practice to be. For example, it is generally perfectly fine to write your passwords down on paper, connect to public wifi, and change your password only if it has been lost or stolen. Unfortunately the old, now disproven, advice continues to circulate with nothing to clean it up and replace it with what we now know.

This outdated advice not only wastes the time of employees, it can also find its way into policy and law. The conflict of new and old advice also makes the security community look unsure which can reduce public trust (Eliot Lear).