Security and Human Behavior 2025 Day 1

Welcome to the 18th Security and Human Behavior workshop (SHB 2025). The write-up below is a live-blog of the workshop.

Contents:

- Session 1: Users and Usability - Andrew A. Adams, Florian Alt, Jonas Hielscher, Simon Parkin, Samantha Phillips, M. Angela Sasse

- Session 2: Wickedness 1 - Christian Eichenmüller, Alice Hutchings, Adam Joinson, Sameer Patil, Daniel W. Woods

- Session 3: Privacy and Transparency - France Belanger, Matt Blaze, Jan H. Klemmer, Steven Murdoch, Michael Specter, Kami Vaniea

- Session 4: Cybersecurity Work - Yasemin Acar, Bonnie Anderson, Laura Kocksch, Alena Naiakshina, Arianna Schuler Scott, Tony Vance

What is Security and Human Behavior? SHB is a workshop organized by Frank Stajano, Bruce Schneier, and Alessandro Acquisti. It brings together a wide range of researchers from many disciplines to discuss their latest work and interesting open questions, and to talk more generally about the state of security.

I personally like this conference because it focuses on important issues rather than on specific research papers. This allows for discussion of topics beyond what might normally be discussed or presented. For example, this year both Dr Egelman and Dr Murdoch shared their experiences as expert witnesses in court cases, along with their opinions about how evidence is constructed and the role of computers and technologists.

Session 1: Users and Usability

Andrew A. Adams, Florian Alt, Jonas Hielscher, Simon Parkin, Samantha Phillips, M. Angela Sasse

Andrew A. Adams

Digital legacy: what happens to data about people when they pass away? There are interesting issues around who gets control of, and who gets access to, our data when we pass away. This is especially pressing with governments, and with technologies like AI that might keep using the data.

The issue is growing as more people with significant digital footprints pass away. The siblings and family of older people who are passing away are themselves likely older and did not grow up with digital technology. They can barely manage their own technology; managing a digital legacy they have inherited from another person is challenging.

Double inheritance of digital data. One person passes away, and then the person who handled that legacy themselves passes away. Now a third person is trying to handle the digital legacy of several people, some of whose data has already been passed down once.

Security is the final issue. It is poorly studied: a Google Scholar search for the terms "post-mortem" and "security" returns only 86 hits, and most of those are about post-incident reviews of security breaches. Very few of the identified papers actually cover the security of these accounts. There are also important security implications for the information the deceased person holds about other people.

Companies are also providing ID for many other services. So when someone passes

All of that assumes that someone is actually dead. What happens, security-wise, if someone who is not dead is claimed to be dead? There have been incidents where someone is attacked by having them falsely declared dead, which causes them to lose access to their data and sometimes their rights.

Florian Alt

Title: Reducing the effort of secure behavior through adapting to human states

Common human-centered attacks start with identity theft. Such theft could happen via things like guessing, social engineering, observation (shoulder surfing) and reconstruction attacks. These all have good theoretical solutions. But these approaches don’t support how people naturally interact with computers.

Behavioral biometrics are one approach: how a user interacts with a device can provide information about their identity. They can also be used to detect the person's state. Are they angry? Are they stressed?

Password entry, for example, can be studied using gaze data to understand whether someone is reusing a password as opposed to constructing a new one.

Schwarz, Jessica, Sven Fuchs, and Frank Flemisch. “Towards a more holistic view on user state assessment in adaptive human-computer interaction.” 2014 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE, 2014.

Jonas Hielscher

Title: Selling Satisfaction: A Qualitative Analysis of Cybersecurity Awareness Vendors’ Promises

Cybersecurity awareness training is a huge market, set to hit $10 billion annually. But does it actually work?

KnowBe4: “There are over 8,500 local, state, and federal standards that your organization might need to implement […] to have a security awareness”

The study started by asking security professionals what search terms they would use to find security awareness vendors. These terms were then used to find the vendors a security professional might consider when choosing which vendors to use. The research questions looked at things like what promises the vendors were making.

"Think before you click." Lots of vendors claim that most breaches come from clicking, but they all cite different statistics.

Marketing focuses on things like easy purchase, easy implementation, employee satisfaction, and handling certification and compliance. An example selling claim from a vendor: "[employees' fail rates are] great ammo to get budget." There is also a focus on phishing, getting numbers from simulated phishing, providing reports, and externalities. Surprisingly little focus is on actually training users to avoid security issues.

- Phishing in Organizations: Findings from a Large-Scale and Long-Term Study

- Selling Satisfaction: A Qualitative Analysis of Cybersecurity Awareness Vendors' Promises

Simon Parkin

Title: Usable Security: Narratives and Negotiations

Recent work has been applying security awareness to economics. How do the people who control decision-making resources make choices? How do they think about security?

Security has lots of hidden costs for users. If a decision maker does not believe such hidden costs exist, they may ignore them when making choices, which then puts the burden onto the user. Such managers are not necessarily experts in user experience. So how do we support someone who is not an expert in security or in human factors, but who may be causing lots of hidden costs for users?

In some ways we are asking a manager to invest money into doing a study to effectively prove that they picked the wrong system. This approach is unlikely to work.

Who has the power and the interest to put some of the existing usability research into practice? Users don’t have the ability to negotiate on their own behalf.

What is the real difference between "Humans are the weakest link" and "Humans are the first line of defense"? Both put the burden directly onto the user.

Samantha Phillips

Title: Evaluating Organizational Information Security Culture Using Situational Judgement Tests

Builds on organizational culture theories such as Hofstede's Organizational Culture Dimensions.

Building a survey scale to measure Level of Control. Response items align with the different known aspects of cultures, as well as with desirable vs undesirable aspects of security.

M. Angela Sasse

Title: The Cybersecurity Bonfire of Human Capital

Extensive effort has gone into doing proper interdisciplinary research with real companies.

The main finding is that compliance work is mostly ineffective and largely inefficient: bureaucratic security theater. The responsibilities theoretically owned by the top are being pushed to the bottom. "Responsibilisation of the least powerful stakeholders," often without asking whether they have the ability to handle that responsibility.

The result is a huge bonfire of human capital with very little effective security in return.

“There is no garbage collection of inaccurate advice”. There are piles of very bad advice being given out by supposedly very skilled cyber security advisors.

No one tracks the operational costs of all the security advice and requirements.

NIST, NCSC, and a pile of others have officially recommended against changing passwords constantly. Yet we still have piles of security advice telling people to change their passwords all the time.

Final lesson: “If you are going to assign responsibility ensure the people you assign it to have the capability.”

- Where are we on cyber? - A qualitative study on Boards' Cybersecurity Risk Decision Making

- Selling Satisfaction: A Qualitative Analysis of Cybersecurity Awareness Vendors' Promises

- Noise and stress don't help with learning: a qualitative study to inform design of effective cybersecurity awareness in …

Session 1 Q&A

Who is producing this useless narrative?

The language used frames it as instructions and then the framing becomes blame.

Capacity is simply assumed. Example: "Why didn't you apply for a 10 million dollar grant?" "Well, I didn't have the time; I was focused on my security training." "Oh, well, that's OK." No one says anything like this; in practice, people skip the training.

Data after you die: how can we learn from other inheritance work?

Other parts of inheritance do look at these issues. The data issue does raise the question of "who is going to pay for this?" Some parts of inheritance involve lots of money, and those get well funded. Data isn't necessarily like that. So the support is not currently there, and we need to think about what support should exist and convince companies to provide it.

What should I do to prepare for death? It's tricky. How do you securely store the passwords? It is a challenge to give things like passwords to someone you can trust with your whole digital identity.

Session 2: Wickedness 1

Christian Eichenmüller, Alice Hutchings, Adam Joinson, Sameer Patil, Daniel W. Woods

Christian Eichenmüller

“It’s not enough to think about usability; you need to think about abusability too” – Ross Anderson

Data collected for one purpose often gets used for another purpose later, which can lead to abuse of that data. Function creep, in both scale and scope.

Governments collect extensive data on people. That data collection is just growing. There is an impulse to collect it all, connect it all, and now inspect it all.

“The ability to turn technology against users.”

- at-risk/high-risk users

- Digital-safety

- Intimate partner violence

"Abusability paranoia" naturally comes up when people discuss security: people are concerned that something might be abused, but are not clear about the details.

Abusability testing is needed to help better understand the situation.

Alice Hutchings

Police officers are surprisingly often involved in cybercrime.

Cambridge Computer Crime Database

Lots of organizations have trouble with employees accessing sensitive data improperly. These cases are rarely reported, often because the organization does not want the negative press.

Lots of these cases link to power and control, such as surveillance or stalking. For example, a partner who is trying to leave is located using police-level or other organization-level database access.

All these issues are happening in the context of police asking for more access to encrypted data.

Adam Joinson

Online harassment is a serious issue for children and for adults. It results in serious mental harm and affects victims' ability to function online.

A bystander is someone who observes such harassment and has the ability to intervene. There has been a good bit of research on bystander intervention in physical spaces. Can we map some of that research onto digital interactions?

When deciding whether to intervene, people consider lots of factors, such as how well they know the victim. They worry about attracting harassment themselves. They also show little faith in social networking systems. The existence of moderators might also affect users' willingness to intervene.

Studies find that people were more willing to intervene privately, via things like flagging the post, than to intervene by posting themselves.

Interesting use of a choose-your-own-adventure game to have a person engage in a scenario in a more interactive way.

Sameer Patil

Generative AI is taking online studies in place of humans, which is negatively impacting science. We treat the answers to online surveys as coming from a truthful human, but some of the answers are now coming from generative AI. Some answers are also copy/pasted from other parts of the web.

Detecting such generated answers is challenging, especially now that polished software is being produced to automate the whole survey experience.

Handling the problem as reviewers is also challenging. Conference paper reviewers need to become more aware of why so much data now has to be dropped. Going forward, it will also be challenging to verify that papers check for cases where an AI may have taken the survey.

Payments are also part of the problem: it is partially happening because participants are often paid. Ethics boards often ask that participants be paid regardless of whether their answers are used.

Daniel Woods

Title: Who you gonna call?

Digital forensics and incident response. These are related but distinct activities, yet they need to happen at the same time and involve overlapping data and systems.

Businesses want to get up and running again as fast as possible.

Insurers can sometimes provide "hotline incident response," where they help find a third party that comes in to do incident response.

One of the largest costs of a breach: the lawsuit. The solution: involve lawyers in everything so that it is all covered by attorney-client privilege. So the lawyers (not security experts) are directing the incident response.

Governments can be called, but they don't tend to help unless major infrastructure is impacted. There is no fire-service equivalent that comes and helps put your systems back together.

There is also insurance for online bullying. These policies cover a surprising range, including things like lawyers, psychological support, and possibly even relocation expenses.

Session 3: Privacy and Transparency

France Belanger, Matt Blaze, Jan H. Klemmer, Steven Murdoch, Michael Specter, Kami Vaniea

France Belanger

Thinking about organizational privacy. Compliance is again a large issue.

Voices of Privacy (voicesofprivacy.com)

Matt Blaze

Title: Making US Elections More Trustworthy

There are some unsatisfactory realities in US Elections:

- Serious vulnerabilities in infrastructure

- No credible evidence that these technical vulnerabilities have ever actually been exploited to alter the outcome of a US election

Most people believe only one of these two things.

In an election it is not enough to detect an error; we must prevent errors. So we can't use security solutions from similar spaces like banking, where detection can be enough.

Technologists focus on: Is the outcome correct.

- Were the votes recorded correctly?

- Were they counted correctly?

The much harder problem is increasing voters' confidence in election outcomes.

Jan H. Klemmer

Title: Transparency in Usable Security and Privacy Research

Transparency means reporting all the relevant details needed to do things like assess the validity of a study and its results. If all the details are there, then future independent validation of the work becomes possible.

Transparency has a mix of incentives and drawbacks. The benefits are very indirect, in that they mostly help other researchers. The drawbacks are that it takes quite a bit of time to construct extra documents for replication, and it leaves the researcher open to having their data analysis critiqued.

Steven Murdoch

The UK legal system is trying to decide how best to handle evidence generated by computers. Should computer-generated data be treated as truthful evidence? If we do not assume computer accuracy, then how do we prove that data or software is sufficiently valid? This problem of evidence validity is a challenging one for the law.

Michael Specter

Tracking tags such as Apple's AirTag can be used to track people. There are also efforts by companies to notify people if a tag is following them around. Dr Specter's work investigates such tags, how they work, and their impacts on people.

Kami Vaniea

Title: Phishing: collaboration between humans and AI

Phishing detection is a security technology that has already been integrating AI. Even before LLMs like ChatGPT became publicly available, the security community was using AI to identify and remove phishing and spam messages from email. Despite that, phishing emails persist in getting through AI filters into inboxes.

Part of the issue is that phishing is actually an authentication issue. We normally think of authentication as how people prove their identity to the computer (a person sends a password to Facebook to log in). But authentication is supposed to be two-directional: the person first needs to be certain they are on the correct page, and only then provide a password.
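To make the first direction concrete, here is a minimal Python sketch of a "check the site before handing over the password" rule, roughly the way a password manager binds a saved credential to a site. The saved origin, the function names `page_is_expected` and `release_password`, and the exact scheme-plus-hostname matching rule are all hypothetical illustrations, not the method presented in the talk.

```python
from urllib.parse import urlsplit

# Hypothetical saved credential binding: the scheme and exact hostname
# the user originally registered the password with.
SAVED_ORIGIN = ("https", "www.facebook.com")


def page_is_expected(url: str, expected=SAVED_ORIGIN) -> bool:
    """Direction 1: does this page really belong to the expected site?

    urlsplit() parses out the real hostname, so tricks like putting the
    genuine name before an '@' or as a prefix of a longer domain fail.
    """
    parts = urlsplit(url)
    return (parts.scheme, parts.hostname) == expected


def release_password(url: str, password: str):
    """Direction 2 happens only after direction 1 succeeds.

    Returns the password if the page checks out, otherwise None.
    """
    if not page_is_expected(url):
        return None
    return password
```

Exact matching is deliberately strict: look-alike addresses such as `https://www.facebook.com.evil.example/` or `https://www.facebook.com@evil.example/` are rejected, and these are the kinds of addresses a person glancing at the address bar can easily misread.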

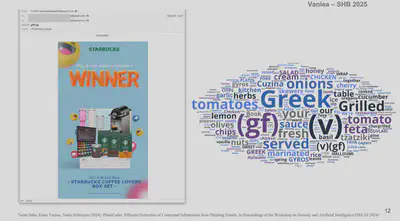

Phishing is particularly AI-hostile. Attackers try to show pictures to people and words to the automated analysis software. The image above shows the same email as it originally appeared (left) and what happens if all the words in the email are put in a word cloud (right).

Moving forward, we need to think about how to put humans and AI into partnership: not just making humans error-correct AI, but recognizing what humans do well that AI is bad at, and how both can contribute.

Session 4: Cybersecurity Work

Yasemin Acar, Bonnie Anderson, Laura Kocksch, Alena Naiakshina, Arianna Schuler Scott, Tony Vance

Yasemin Acar

Ethics and morality in computer architecture.

Bonnie Anderson

Title: Neural Evidence of Generalization

Using neurophysiological tools to study how people process security information.

Women in Cybersecurity. Women are interested because it is a growing field, and there are opportunities to work in a more flexible manner. But they also face a lack of female mentorship. There are not many visible women working in these spaces.

Laura Kocksch

Title: Fragile Computing: How to live with insecurity?

Systems vary widely in their security. Practical security can be a collective set of actions that keeps data safe in a given situation, matched to its risks. Real security is a messy proactive problem and a collective craft.

"Security will always be imperfect." But it can be challenging to come to terms with that and be OK with good-enough security.

Alena Naiakshina

Scrum software development promotes collaboration, but its structure can lead to security being de-prioritized. There are also challenges around getting clients to list security as a desired outcome.

Women security experts can face more challenges. Some men do not want to hear from a woman that they have security issues in their code. Gender bias can also result in worse security, because the security advice gets ignored.

Arianna Schuler Scott

Interesting thoughts on how to build the cybersecurity talent pool.

Tony Vance

Title: Security and Organizational Complexity

Complex systems:

- More security bugs

- More modularity

- Harder to understand

- Harder to evaluate

- More interconnected

These principles might also apply to organization structure.

Events that impact company complexity like mergers can negatively impact security. Companies that merge multiple times a year are more likely to have a data breach.

- "Complexity is the Worst Enemy of Security": Studying Cybersecurity Through the Lens of Organizational Complexity

- How Mergers and Acquisitions Increase Data Breaches: A Complexity Perspective

- Taming Complexity in the Cybersecurity of Multihospital Systems: The Role of Enterprise-Wide Data Analytics Platforms